Learn libp2p in Rust

“The Rust Implementation of the libp2p networking stack.”

https://github.com/libp2p/rust-libp2p/blob/master/libp2p/src/tutorials/ping.rs

Peer-to-peer networking library

libp2p is an open-source networking library used by major distributed systems such as Ethereum, IPFS and Filecoin.

What is a swarm?

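In rust-libp2p, a `Swarm` drives your local node on a P2P network. It combines a `Transport`, which opens and accepts connections to other peers, with a `NetworkBehaviour`, which decides which protocols run over those connections (for example, ping or Kademlia) and how to react to their events. The swarm manages the connections and passes events between the two.

You build a Swarm by combining an identity (a keypair, from which the node's `PeerId` is derived), one or more Transports, and a NetworkBehaviour.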

Add these dependencies to `Cargo.toml`:

```toml
[dependencies]
libp2p = { version = "0.54", features = ["noise", "ping", "tcp", "tokio", "yamux"] }
futures = "0.3.30"
tokio = { version = "1.37.0", features = ["full"] }
tracing-subscriber = { version = "0.3", features = ["env-filter"] }
```


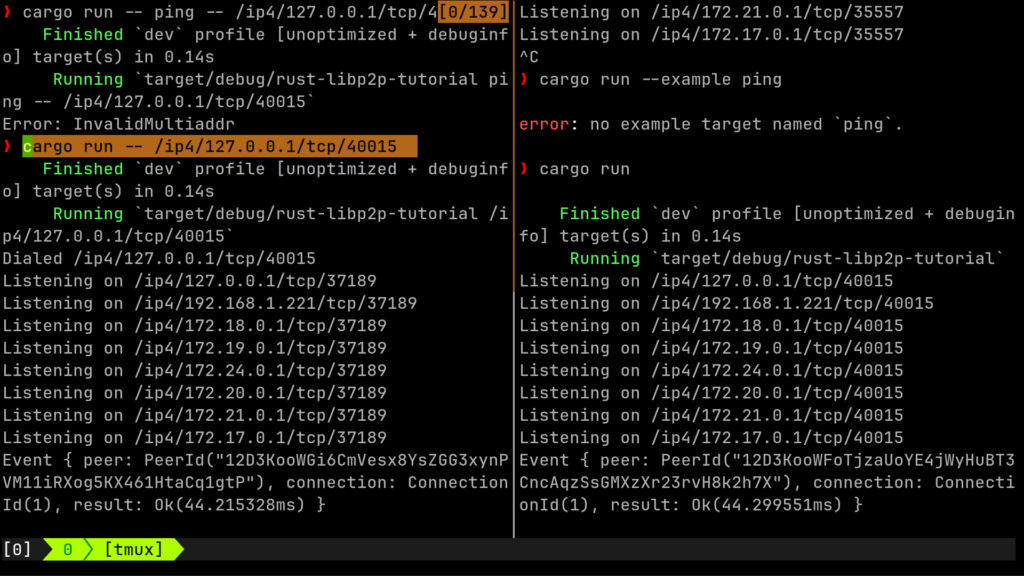

Demo the “ping” example

Run the first instance with `cargo run`.

Run the second instance with `cargo run -- /ip4/127.0.0.1/tcp/40015`, where 40015 is the port shown in the first instance's output.

Note: the port number changes on every run, because the example listens on TCP port 0 and lets the operating system pick a free port. Always dial the port printed by the first instance when you ping from the second.
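The bookkeeping above (read the port from instance 1, build the address for instance 2) can be sketched in plain Rust. This is a minimal, std-only illustration assuming the first instance prints an address in the `/ip4/<ip>/tcp/<port>` form; `tcp_port` and `dial_addr` are hypothetical helpers, not part of libp2p.

```rust
/// Hypothetical helper: pull the TCP port out of a line of output such as
/// `Listening on /ip4/127.0.0.1/tcp/40015` (quotes around the address are fine).
fn tcp_port(line: &str) -> Option<u16> {
    // Everything after the first "/tcp/" starts with the port digits.
    let rest = line.split("/tcp/").nth(1)?;
    let digits: String = rest.chars().take_while(|c| c.is_ascii_digit()).collect();
    // Fails (returns None) if there are no digits or the number exceeds u16::MAX.
    digits.parse().ok()
}

/// Hypothetical helper: the loopback address the second instance should dial.
fn dial_addr(port: u16) -> String {
    format!("/ip4/127.0.0.1/tcp/{port}")
}

fn main() {
    let line = "Listening on /ip4/127.0.0.1/tcp/40015";
    match tcp_port(line) {
        Some(port) => println!("cargo run -- {}", dial_addr(port)),
        None => println!("no TCP port found in: {line}"),
    }
}
```

Because the example listens on all interfaces, it may print several `Listening on` lines; the port is the same in each, so any of them works with this helper.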

In the screenshot, instance 1 (right-hand side) reported port 40015, so instance 2 (left-hand side) dials that port:

```sh
cargo run -- /ip4/127.0.0.1/tcp/40015
```

Demo the basic distributed key-value store (Kademlia and mDNS)

Kademlia paper (Maymounkov and Mazières): https://pdos.csail.mit.edu/~petar/papers/maymounkov-kademlia-lncs.pdf

rust-libp2p distributed key-value store example: https://github.com/libp2p/rust-libp2p/tree/master/examples/distributed-key-value-store#conclusion

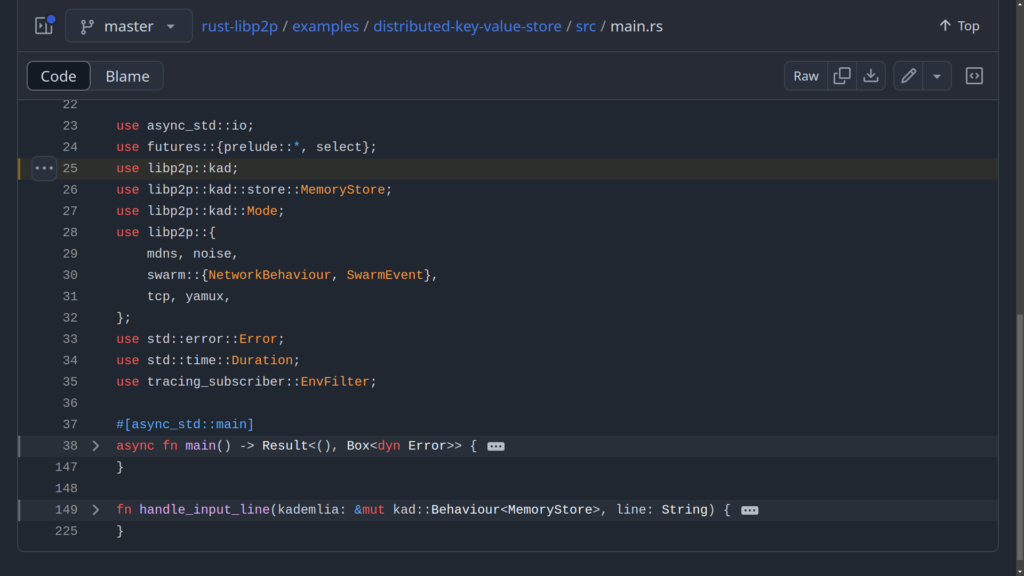

Kademlia is a distributed hash table (DHT) protocol for decentralized peer-to-peer (P2P) networks. Nodes and keys share the same ID space, and the distance between two IDs is their bitwise XOR; each record is stored on the nodes whose IDs are closest to its key. Every node keeps a routing table of k-buckets, where bucket i holds up to k peers whose distance from the node lies in [2^i, 2^(i+1)). A lookup repeatedly asks the closest known peers for peers even closer to the target key, so it finishes in roughly O(log n) steps in a network of n nodes, which keeps lookups efficient as the network scales. Because records are replicated on several nearby nodes and buckets favour long-lived peers, Kademlia tolerates nodes joining and leaving, which is why it is popular in decentralized applications.
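The XOR metric and the bucket assignment described above fit in a few lines. This std-only sketch assumes 64-bit IDs to keep it short; libp2p's Kademlia actually uses 256-bit keys derived with SHA-256, so `Id`, `distance` and `bucket_index` are illustrative, not the library's API.

```rust
/// Simplified node/key identifier (real libp2p Kademlia keys are 256 bits).
type Id = u64;

/// Kademlia's distance metric: bitwise XOR, read as an unsigned integer.
fn distance(a: Id, b: Id) -> u64 {
    a ^ b
}

/// Index of the k-bucket a peer falls into: the position of the highest bit in
/// which the two IDs differ, i.e. bucket i covers distances in [2^i, 2^(i+1)).
/// Returns None for the node's own ID (distance 0).
fn bucket_index(local: Id, peer: Id) -> Option<u32> {
    let d = distance(local, peer);
    if d == 0 {
        None
    } else {
        Some(63 - d.leading_zeros())
    }
}

fn main() {
    let local: Id = 0b1011;
    for peer in [0b1010u64, 0b1001, 0b0011] {
        println!(
            "peer {peer:04b}: distance {}, bucket {:?}",
            distance(local, peer),
            bucket_index(local, peer)
        );
    }
}
```

Peers that differ from the local ID only in low bits land in low buckets (they are "close"), while a difference in the top bit puts a peer in the highest, farthest bucket.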

Multicast DNS (mDNS) is a protocol for discovering devices on a local network without a central DNS server. Nodes advertise their presence and find each other by sending DNS queries and responses to a multicast address, so discovery is limited to the local network segment. This makes mDNS well suited to peer discovery in small, ad hoc networks. Combined with Kademlia, mDNS lets peers on the same network find each other and connect, after which Kademlia handles routing and data lookup. Together, your custom network behaviour uses mDNS for quick local peer discovery and Kademlia for scalable, decentralized data storage and retrieval.
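The hand-off from mDNS to Kademlia amounts to "every peer mDNS discovers goes into the routing table". In the linked rust-libp2p example this happens when an `mdns::Event::Discovered` event arrives and each peer is passed to Kademlia with `add_address`. The std-only sketch below models only the routing-table side with a hypothetical `RoutingTable` over 64-bit IDs; the real `kad` behaviour manages its table internally and uses k = 20 by default, while this sketch uses a tiny k so the full-bucket case is easy to see.

```rust
use std::collections::VecDeque;

/// Toy Kademlia routing table: 64 k-buckets over 64-bit IDs (hypothetical type).
struct RoutingTable {
    local: u64,
    k: usize,
    buckets: Vec<VecDeque<u64>>,
}

impl RoutingTable {
    fn new(local: u64, k: usize) -> Self {
        Self { local, k, buckets: vec![VecDeque::new(); 64] }
    }

    /// Called for each peer discovered (e.g. from an mDNS announcement).
    /// Returns false if the peer was not stored.
    fn insert(&mut self, peer: u64) -> bool {
        let d = self.local ^ peer;
        if d == 0 {
            return false; // never store ourselves
        }
        let bucket = &mut self.buckets[(63 - d.leading_zeros()) as usize];
        if let Some(pos) = bucket.iter().position(|&p| p == peer) {
            // Already known: move it to the tail (most recently seen).
            bucket.remove(pos);
            bucket.push_back(peer);
            true
        } else if bucket.len() < self.k {
            bucket.push_back(peer);
            true
        } else {
            // Full bucket: real Kademlia pings the least recently seen peer and
            // evicts it only if it fails to answer; this sketch keeps the old peer.
            false
        }
    }

    /// The `n` known peers closest to `key` by XOR distance.
    fn closest(&self, key: u64, n: usize) -> Vec<u64> {
        let mut all: Vec<u64> = self.buckets.iter().flatten().copied().collect();
        all.sort_by_key(|&p| p ^ key);
        all.truncate(n);
        all
    }
}

fn main() {
    let mut table = RoutingTable::new(0b0000, 2);
    // Pretend these peer IDs were announced by mDNS on the local network.
    for peer in [0b1000, 0b1001, 0b1010, 0b0001, 0b0011] {
        let stored = table.insert(peer);
        println!("discovered {peer:04b}, stored: {stored}");
    }
    println!("closest to 1011: {:?}", table.closest(0b1011, 2));
}
```

Preferring peers that are already in a full bucket is a deliberate Kademlia design choice: long-lived peers are more likely to stay online, which is part of the fault tolerance described above.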

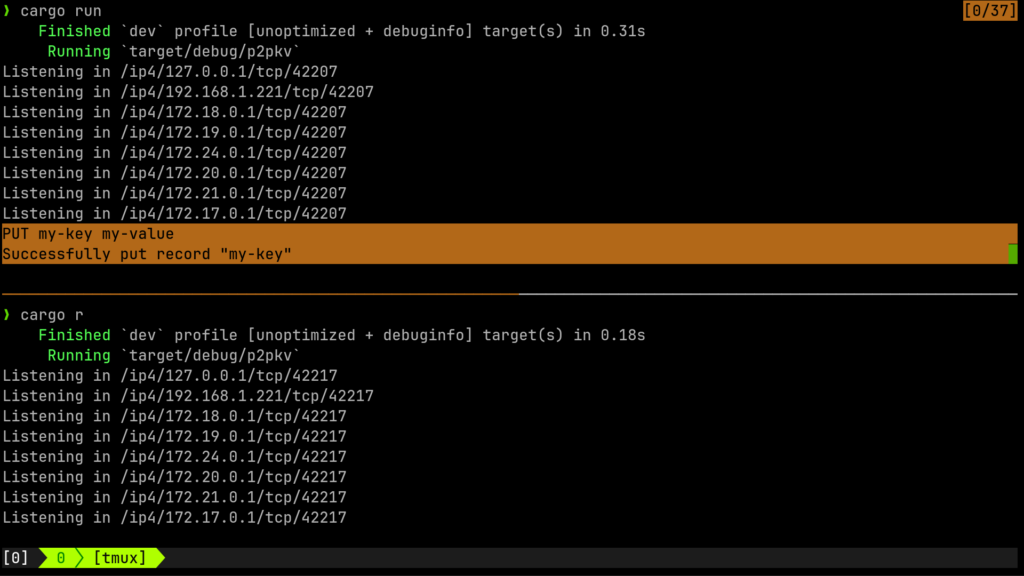

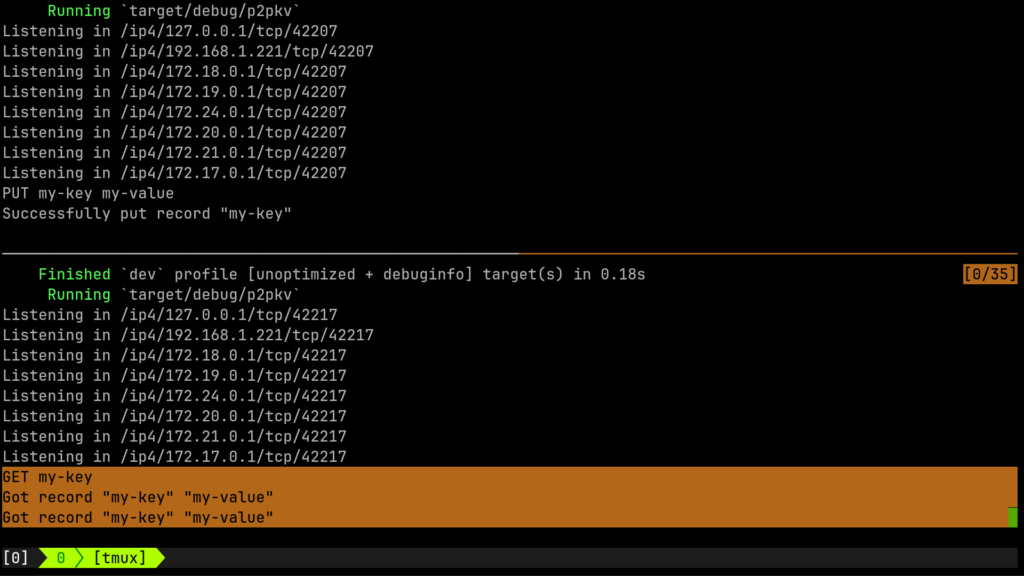

Demo the example code:

How to Set Up Public libp2p Connections

To allow a remote client to connect to your libp2p node deployed on a cloud provider, follow these steps:

1. Set Up Your Cloud Environment

Choose a cloud provider (like AWS, Google Cloud, Azure, DigitalOcean, etc.) and set up a virtual machine (VM) or container. Ensure that the VM has:

- A public IP address or domain name.

- Open network ports for the protocols you plan to use (e.g., TCP port 4001, which the examples below use; libp2p has no fixed default port, and 4001 is the port IPFS uses by convention).

2. Deploy Your libp2p Node

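- Transfer your libp2p application to the cloud VM using `git`, `scp`, or any other file transfer method.
- Run your libp2p node on the VM and make sure it accepts connections arriving at the VM's public IP. On providers that attach the public IP directly to the VM's network interface (for example, DigitalOcean Droplets) you can listen on that IP explicitly. On providers that translate the public IP to a private one (for example, AWS EC2 and Google Cloud), binding to the public IP fails, so listen on `/ip4/0.0.0.0/tcp/4001` (all interfaces) instead.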

Example of Setting Up a Node:

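```rust
// Assumes `swarm` is built as in the ping example and `?` can propagate errors.
use libp2p::Multiaddr;

// Listen on all interfaces, port 4001; this works whether or not the public IP is bound to the VM.
let listen_addr: Multiaddr = "/ip4/0.0.0.0/tcp/4001".parse()?;
swarm.listen_on(listen_addr.clone())?;
// Advertise the public address so other peers know how to reach this node.
let public_ip = "YOUR_PUBLIC_IP_ADDRESS"; // Replace with your VM's public IP
swarm.add_external_address(format!("/ip4/{public_ip}/tcp/4001").parse()?);
```

3. Open Firewall Rules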

Ensure that the VM’s firewall rules allow incoming traffic on the specified port (e.g., TCP port 4001). Here’s how to do this on common cloud providers:

- AWS: Update the Security Group associated with your instance to allow inbound traffic on TCP port 4001.

- Google Cloud: Create a firewall rule that allows TCP traffic on port 4001 for the VM instance.

- DigitalOcean: Add a rule to allow TCP traffic on port 4001 in the Cloud Firewalls section.
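After changing the firewall rules, it helps to check from a machine outside the cloud network that the port is actually reachable. This std-only sketch uses a hypothetical `port_reachable` helper built on `TcpStream::connect_timeout`; `203.0.113.10` is a documentation address (RFC 5737) standing in for your VM's public IP. A successful TCP connect only shows that something is accepting connections on that port; the libp2p handshake (Noise, Yamux) still has to succeed afterwards.

```rust
use std::net::{SocketAddr, TcpStream};
use std::time::Duration;

/// Returns true if a TCP connection to `addr` succeeds within `timeout`.
/// Run from outside the cloud network, this exercises the security group /
/// firewall rule and confirms the node is listening on that port.
fn port_reachable(addr: SocketAddr, timeout: Duration) -> bool {
    TcpStream::connect_timeout(&addr, timeout).is_ok()
}

fn main() {
    // Replace with your VM's public IP; 203.0.113.10 is a placeholder.
    let addr: SocketAddr = "203.0.113.10:4001".parse().expect("valid socket address");
    let ok = port_reachable(addr, Duration::from_secs(3));
    println!("TCP port 4001 reachable: {ok}");
}
```

If this returns false while the node is running, the usual suspects are a missing inbound rule, an OS-level firewall on the VM, or a node listening on an address the VM does not own.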

4. Remote Client Configuration

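- The remote client must support at least one transport, security protocol and stream multiplexer in common with your node (for example, TCP with Noise and Yamux, as in the ping example).
- In your client application, specify the multiaddress of your cloud-hosted libp2p node using its public IP and, ideally, its peer ID.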
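A textual multiaddress is a chain of `/protocol/value` pairs: `/ip4/<public IP>/tcp/4001/p2p/<peer ID>` says "IPv4 address, then TCP port, then the libp2p peer to expect at that endpoint". The std-only sketch below splits such a string to make that structure visible. `components` and `peer_id_of` are hypothetical helpers that only handle protocols taking exactly one value; real code should parse into `libp2p::Multiaddr`, which knows every protocol's encoding. The peer ID shown is a placeholder.

```rust
/// Hypothetical helper: split "/ip4/1.2.3.4/tcp/4001/p2p/<id>" into
/// (protocol, value) pairs. Only valid for protocols that carry one value
/// (ip4, ip6, tcp, udp, dns4, p2p, ...); returns None on malformed input.
fn components(addr: &str) -> Option<Vec<(&str, &str)>> {
    let mut parts = addr.strip_prefix('/')?.split('/');
    let mut out = Vec::new();
    while let Some(proto) = parts.next() {
        let value = parts.next()?; // every protocol here needs a value
        out.push((proto, value));
    }
    Some(out)
}

/// Hypothetical helper: the peer ID carried by the trailing `/p2p/...` part.
fn peer_id_of(addr: &str) -> Option<&str> {
    components(addr)?
        .into_iter()
        .find(|(proto, _)| *proto == "p2p")
        .map(|(_, value)| value)
}

fn main() {
    let addr = "/ip4/203.0.113.10/tcp/4001/p2p/12D3KooWExample";
    println!("{:?}", components(addr));
    println!("peer: {:?}", peer_id_of(addr));
}
```

Including the `/p2p/` part lets the dialer check that the node it reaches really holds that peer ID, since the Noise handshake authenticates it.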

Example of Client Connection:

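```rust
// Assumes `swarm` is built as in the ping example and `?` can propagate errors.
use libp2p::Multiaddr;

// Replace both placeholders; the node can print its ID with `swarm.local_peer_id()`.
let peer_id_str = "YOUR_PEER_ID";
let addr: Multiaddr = format!("/ip4/YOUR_PUBLIC_IP_ADDRESS/tcp/4001/p2p/{peer_id_str}").parse()?;

// Connect to the remote peer; success or failure arrives later as a SwarmEvent.
swarm.dial(addr)?;
```

5. Peer Discovery (Optional)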

- If you want the client to discover peers dynamically, use one or both of:
  - A DHT (distributed hash table) such as Kademlia, which lets the client find nodes over the internet without knowing their addresses in advance.
  - A bootstrap node that all clients know and connect to first to obtain peer information. In practice the two are combined: a new node joins a Kademlia DHT by first connecting to one or more known bootstrap nodes.
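The bootstrap-then-DHT flow above can be sketched as a simplified iterative lookup over a toy in-memory network: the client knows only a bootstrap node and repeatedly asks the closest peer it has heard of for that peer's neighbours, moving closer (by XOR distance) to the target ID at each step. The `Network` map, the 64-bit IDs and `lookup` are hypothetical stand-ins; real Kademlia queries several peers in parallel, tracks the k closest peers rather than one, and sends these requests over the network, which rust-libp2p's `kad` module does for you.

```rust
use std::collections::{HashMap, HashSet};

/// Toy network: each node ID maps to the peer IDs it knows about.
/// In a real deployment each entry lives on a different machine.
type Network = HashMap<u64, Vec<u64>>;

/// Greedy iterative lookup starting from `bootstrap`: always query the closest
/// known peer to `target`; stop once that peer has already been queried, i.e.
/// no closer peer has turned up.
fn lookup(net: &Network, bootstrap: u64, target: u64) -> u64 {
    let mut queried: HashSet<u64> = HashSet::new();
    let mut candidates: Vec<u64> = vec![bootstrap];
    loop {
        candidates.sort_by_key(|&p| p ^ target);
        let closest = candidates[0];
        if !queried.insert(closest) {
            return closest; // converged
        }
        // "Ask `closest` for peers near `target`": learn the peers it knows.
        for &peer in net.get(&closest).into_iter().flatten() {
            if !candidates.contains(&peer) {
                candidates.push(peer);
            }
        }
    }
}

fn main() {
    // Hypothetical 4-bit IDs; each node only knows a couple of others.
    let net: Network = HashMap::from([
        (0b0001, vec![0b0100, 0b1000]),
        (0b0100, vec![0b0001]),
        (0b1000, vec![0b1100, 0b0001]),
        (0b1100, vec![0b1110, 0b1000]),
        (0b1110, vec![0b1100]),
    ]);
    let found = lookup(&net, 0b0001, 0b1111);
    println!("closest node to 1111 reached from bootstrap 0001: {found:04b}");
}
```

Starting from 0001, the lookup hops through 1000 and 1100 to 1110, each hop at least halving the XOR distance to the target; this is the behaviour that gives Kademlia its logarithmic lookup cost.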

6. Security Considerations

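- Make sure your libp2p application encrypts traffic between the client and the node, for example with the Noise protocol (the `noise` feature in the dependencies above). Noise also authenticates each side's `PeerId`.
- Noise proves who a peer is, not whether it is allowed in. If sensitive data is transmitted, add an authorization step, such as only accepting connections from known peer IDs.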

7. Test the Connection

Once everything is set up:

- Start your libp2p node on the cloud VM.
- Run your remote client to connect to the node using the configured multiaddress.

- Monitor logs to verify that the connection is established successfully.

Example Output Check

You can add logging to both the server and client to verify the connection:

On the Server (Cloud Node):

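```rust
// `event` is the next `libp2p::swarm::SwarmEvent` from `swarm.select_next_some().await`.
if let SwarmEvent::NewListenAddr { address, .. } = &event { println!("Listening on {address:?}"); }
```

On the Client: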

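```rust
// `event` is the next `libp2p::swarm::SwarmEvent` from `swarm.select_next_some().await`.
if let SwarmEvent::ConnectionEstablished { peer_id, .. } = &event {
    println!("Connected to {:?}", peer_id);
}
```

By following these steps, a remote client should be able to connect to your libp2p node hosted on a cloud provider over the public internet.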