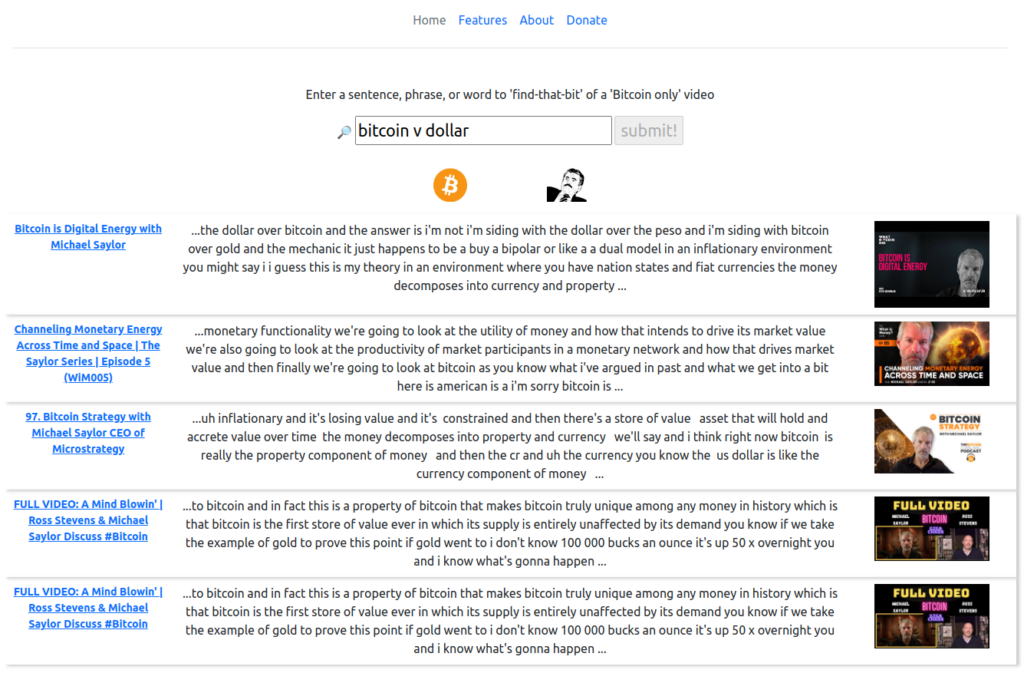

findthatbit.com – made with Qdrant

Watched a video about Bitcoin but forgotten the name of it or what part of it you liked?

With findthatbit.com you can search based upon meaning. If you remember a video was about bitcoin and there was talk of “seaside” property. Just search for “seaside” and you’ll get the link to where “miami beach” was discussed by Michael Saylor.

Introduction

The overall concept was to gather data in JSON format, encode it, and store in a vector database. I chose ‘qdrant’ as it caught my attention because it’s written in Rust and they had some very clear tutorials on their website.

I looked at creating an API in Rust, but for my MVP I thought I’d prove the idea first. The code to get FastAPI up and running is very straightforward.

The workflow can bee seen here on the Qdrant site

The 3 phases of the findthatbit project

- Extract, Transform and Load the data

- Create the API and JavaScript to query the data

- Front end

I chose to use the Qdrant docker container, and followed their quickstart: https://qdrant.tech/documentation/quick-start/

Depending on your hosting, you may need to make some updates to your server first to prepare it for Docker. Watch the YouTube video: Qdrant Vector Database for AI Project | Webdock Linux VPS 🐳

Much of the code for findthatbit.com was the same as the Qdrant guide. What was different?

Well, the Qdrant guide uses a “dataset” which when you check what kind of object it is in Python you will see it’s an Arrow dataset. See more about Arrow here: https://arrow.apache.org/docs/python/getstarted.html

I extracted my data as a sequence of JSON and saved as “subtitles.txt”

The crucial part here was to notice that it was just many rows of JSON

As an example, the 3 rows below are my “parsed_subtitles.txt”:

{'k1': 100, 'text': 'hello, this is sentence 1, 'k4': 4}

{'k1': 160, 'text': 3, 'quick brown fox': 5}

{'k1': 5000, 'text': 3, 'there is no second best, only Bitcoin': 3}I needed to convert this into an iterator to use with the Qdrant batch upsert feature, so after a bit of experimentation this was my solution:

fname = f"data/{upsert_id}/parsed_subtitles.txt"

df = pd.read_json(fname, lines=True)

print(df[:4])

print("dataframe loaded\n")

vectors = model.encode([

row.text

for row in df.itertuples()

], show_progress_bar=True)

print(vectors.shape)

np.save('startup_vectors.npy', vectors, allow_pickle=False)The key part here really is df.itertuples() it is what gets the ‘text’ values and allows them to be encoded ready to be stored in the Qdrant vector database.

In case you have also seen other vector databases, they have slightly different terminology:

Qdrant

vectors=vectors, payload=payload, ids=NoneOthers

embeddings, meta, idHow do you search the database once you have vectors in it?

As per the excellent qdrant examples, you can use their Python client to query, but you also must encode your query, as the similarity search is looking for a match between the encoded query and the encoded data..or rather ‘closest match’.

Conclusion

I’m not going to repeat the content from Qdrant’s site, so I’ll leave you with perhaps the most useful (for me) page : https://qdrant.tech/documentation/tutorials/neural-search/

findthatbit

Check it out : findthatbit.com